In the realm of cryptography, the intricacies of encryption algorithms often resemble a labyrinth, beckoning both enthusiasts and professionals to traverse their depths. Among these algorithms lies the Triple Data Encryption Standard, commonly known as 3DES. This algorithm, a descendant of the original Data Encryption Standard (DES), has graced the digital landscape for decades. But a question lingers: what block size does 3DES use, and why does it matter?

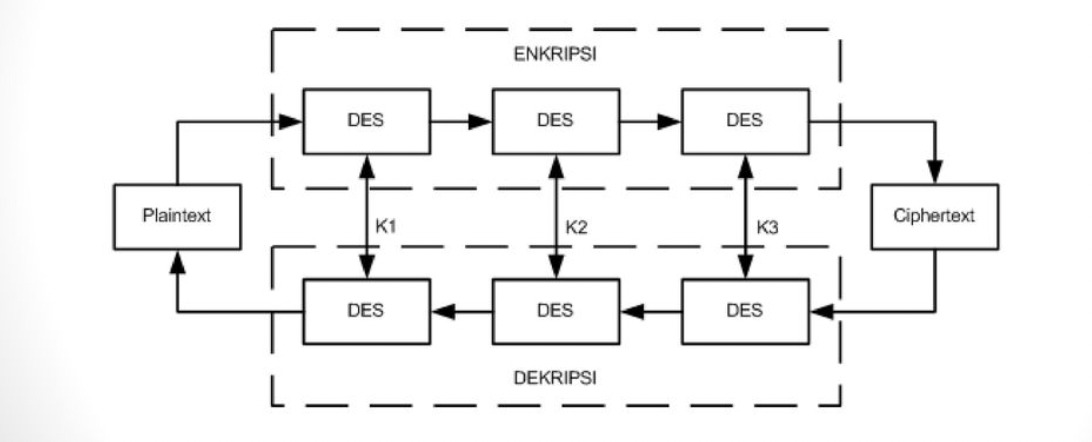

To properly decode this inquiry, one must first grasp the foundational elements of block ciphers. Block ciphers process data in fixed-size blocks rather than one bit or byte at a time, as stream ciphers do. The block size is critical in determining not just the security of the encryption but also the efficiency of the entire process. In the case of 3DES, the block size is 64 bits (8 bytes).
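To make the idea of fixed-size blocks concrete, here is a minimal sketch of how a message might be padded and cut into 64-bit blocks before a cipher such as 3DES sees it. The helper names `pad_pkcs7` and `split_blocks` are illustrative assumptions, and PKCS#7 is only one common padding scheme; no encryption happens here.

```python
BLOCK_SIZE = 8  # 64 bits, the 3DES block size


def pad_pkcs7(data: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Append N bytes of value N so the length is a multiple of block_size.

    A full block of padding is added when the data is already aligned,
    so the padding can always be removed unambiguously.
    """
    pad_len = block_size - (len(data) % block_size)
    return data + bytes([pad_len]) * pad_len


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Split already-padded data into fixed-size blocks."""
    if len(data) % block_size != 0:
        raise ValueError("data length must be a multiple of the block size")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


# A 14-byte message needs 2 bytes of padding and yields two 8-byte blocks.
blocks = split_blocks(pad_pkcs7(b"attack at dawn"))
```

Each element of `blocks` is exactly 8 bytes long, which is the unit the cipher would operate on.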

Now, let’s pause for a moment to ponder: Is a 64-bit block size sufficient in today’s digital environment, or is it an archaic remnant of a bygone era? As the digital world evolves at an exponential rate, this question grows increasingly pertinent. The implications extend beyond theoretical discussions into tangible security practices.

3DES derives its name from the process it employs to enhance security: it applies the DES algorithm three times to each data block. While the original DES algorithm uses a relatively limited key size of 56 bits, 3DES improves upon this with nominal key sizes of 112 or 168 bits, depending on whether two or three unique keys are employed. However, the 64-bit block size remains unchanged. This mismatch, a longer key paired with the original block size, poses a challenge, especially when juxtaposed against modern encryption standards.
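The three-pass structure can be sketched as follows. This assumes the usual encrypt-decrypt-encrypt (EDE) ordering, which the text above does not spell out, and it substitutes a toy 64-bit keyed function for DES. `toy_encrypt` and `toy_decrypt` are hypothetical stand-ins with no security value; they exist only to show how the passes compose and why the block stays at 64 bits.

```python
MASK = (1 << 64) - 1  # keep every value inside one 64-bit block


def toy_encrypt(block: int, key: int) -> int:
    """Toy stand-in for DES encryption: add the key, then rotate left by 13."""
    x = (block + key) & MASK
    return ((x << 13) | (x >> 51)) & MASK


def toy_decrypt(block: int, key: int) -> int:
    """Inverse of toy_encrypt: rotate right by 13, then subtract the key."""
    x = ((block >> 13) | (block << 51)) & MASK
    return (x - key) & MASK


def ede_encrypt(block: int, k1: int, k2: int, k3: int) -> int:
    """Encrypt with k1, decrypt with k2, encrypt with k3."""
    return toy_encrypt(toy_decrypt(toy_encrypt(block, k1), k2), k3)


def ede_decrypt(block: int, k1: int, k2: int, k3: int) -> int:
    """Undo ede_encrypt by reversing the three passes."""
    return toy_decrypt(toy_encrypt(toy_decrypt(block, k3), k2), k1)


def nominal_key_bits(unique_keys: int) -> int:
    """Nominal key length: 56 bits per independent DES key."""
    return 56 * unique_keys
```

With two unique keys the third pass reuses the first key (`k3 = k1`), giving the 112-bit option; three unique keys give 168 bits. In either case the input and output of `ede_encrypt` are single 64-bit values, so the extra key material does nothing to widen the block.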

Understanding why block size matters requires a closer look at its implications for security and performance. A small block size exposes a cipher to collision attacks rooted in the birthday paradox. With a 64-bit block there are only 2^64 possible block values, so after roughly 2^32 blocks (about 32 GiB) encrypted under the same key, two ciphertext blocks are likely to coincide. In common modes of operation such as CBC, a repeated ciphertext block reveals the XOR of two plaintext blocks, and the Sweet32 attack showed in 2016 that this leak can be exploited in practice. The more data flows under a single key, the more such collisions accumulate and the more effective these attacks become.
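The collision risk can be estimated with the standard birthday approximation. The sketch below assumes ciphertext blocks behave like uniformly random values, which is a simplification, and the function name `collision_probability` is illustrative.

```python
import math


def collision_probability(num_blocks: int, block_bits: int) -> float:
    """Approximate chance that at least two of num_blocks values collide.

    Uses the birthday approximation p ~= 1 - exp(-n(n-1) / 2^(b+1)),
    where n is the number of blocks and b the block size in bits.
    """
    exponent = -num_blocks * (num_blocks - 1) / 2 ** (block_bits + 1)
    # expm1 keeps precision when the probability is extremely small.
    return -math.expm1(exponent)


p_64 = collision_probability(2 ** 32, 64)    # 2^32 blocks, 64-bit block
p_128 = collision_probability(2 ** 32, 128)  # same volume, 128-bit block
```

For 2^32 blocks, `p_64` comes out at roughly 0.39, while `p_128` is vanishingly small (below 1e-19). The same volume of data that is dangerous for a 64-bit block is harmless for a 128-bit one.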

Moreover, the 64-bit block size of 3DES limits how much data can be encrypted safely under a single key. As organizations increasingly handle vast amounts of data, a larger block becomes essential. A 128-bit block, as used by the Advanced Encryption Standard (AES), pushes the point at which collisions become likely out to about 2^64 blocks, far beyond the volumes any single key handles in practice.
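To put the 64-bit versus 128-bit comparison in concrete terms, the following sketch converts the birthday bound (about 2^(b/2) blocks for a b-bit block) into a data volume. The helper name `birthday_bound_bytes` is an assumption for illustration, and real key-usage limits are normally set well below this bound.

```python
GIB = 2 ** 30  # bytes in a gibibyte
EIB = 2 ** 60  # bytes in an exbibyte


def birthday_bound_bytes(block_bits: int) -> int:
    """Bytes processed under one key once about 2^(b/2) blocks are reached."""
    blocks = 2 ** (block_bits // 2)
    bytes_per_block = block_bits // 8
    return blocks * bytes_per_block


limit_3des = birthday_bound_bytes(64)   # 2^32 blocks of 8 bytes
limit_aes = birthday_bound_bytes(128)   # 2^64 blocks of 16 bytes

print(f"64-bit block:  {limit_3des // GIB} GiB")
print(f"128-bit block: {limit_aes // EIB} EiB")
```

The 64-bit figure works out to 32 GiB, a volume a busy connection or backup job can reach quickly, whereas the 128-bit figure is 256 EiB.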

Is it surprising, then, that 3DES has gradually fallen out of favor in recent years? Its reliance on the antiquated DES framework, coupled with the small block size, presents a paradox: a key long enough to resist brute force, wrapped around a block too short to resist modern attack vectors. As cybersecurity professionals strive to implement the most secure protocols, the inadequacies of older algorithms loom large.

With the arrival of more sophisticated attacks aiming to exploit the weaknesses of cryptographic systems, reliance on 3DES raises significant concerns. The National Institute of Standards and Technology (NIST) has moved to retire the algorithm: its transition guidance in SP 800-131A Revision 2 deprecated 3DES encryption through 2023 and disallows it afterward, leaving decryption of already-protected data as legacy use. The ramifications of this transition are profound, urging entities to reconsider their cryptographic frameworks.

Transitioning to an algorithm like AES, which utilizes larger block sizes and stronger security measures, is not without its challenges. While the benefits of enhanced security are undeniable, the shift often requires an overhaul of existing systems and processes. This necessitates considering interoperability, performance impact, and the potential disruption that may arise during the transition phase.

Nonetheless, the pivot towards more robust algorithms is vital. The evolution of threats in the digital landscape demands that cryptographic measures keep pace. It raises a provocative question: Are we willing to remain tethered to outdated methods, or shall we embrace a future where encryption is not just a necessity but a fortified shield against every conceivable threat? The choice is not merely theoretical; it reflects a fundamental responsibility owed to users, clients, and stakeholders reliant on the integrity of data.

This quest for modernity ushers in a period of introspection for organizations still employing 3DES. Evaluating existing encryption protocols and implementing more advanced alternatives requires a commitment to embedding security in every layer of operations. Such a shift not only fortifies defenses but also fosters confidence among users regarding their data’s security.

In conclusion, the significance of the block size within cryptographic algorithms cannot be overstated. For 3DES, the 64-bit block size is the weak link: the longer key provides a semblance of security, while the short block exposes vulnerabilities that could be detrimental in a landscape riddled with cyber threats. As the digital age matures, so too must our approaches to cybersecurity. The journey towards more secure algorithms is fraught with challenges, but the stakes have never been higher. In the grand tapestry of encryption, understanding block size and choosing algorithms accordingly can enhance protection in our increasingly interconnected world.
