In cryptography, the performance of algorithms is frequently scrutinized, particularly their speed. As digital communication proliferates and data privacy becomes paramount, a natural question arises: is there a reliable metric for comparing the speed of cryptographic algorithms? Answering it requires examining the factors that influence algorithm performance, the techniques used to measure it, and the trade-offs between speed and other critical properties such as security and resource consumption.

The primary purpose of cryptographic algorithms, whether for encryption, hashing, or authentication, is to safeguard the confidentiality, integrity, and authenticity of data. However, these algorithms consume computational resources, and their cost can significantly affect the efficiency of the systems that rely on them. Quantifying speed is therefore important not only for researchers but also for developers striving to implement robust security measures in their applications.

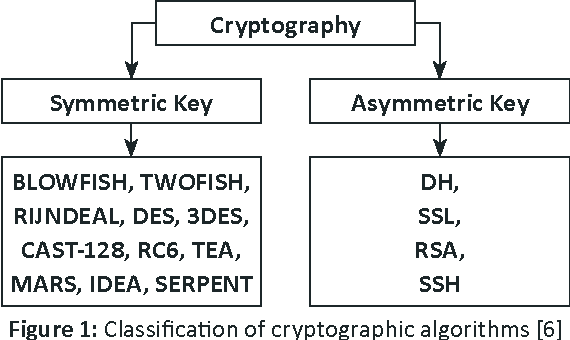

When analyzing the speed of cryptographic algorithms, one must first distinguish the fundamental families: symmetric-key algorithms (block and stream ciphers), asymmetric (public-key) algorithms, and hash functions. Public key infrastructure (PKI), sometimes listed alongside these, is not an algorithm family but a system for managing certificates and keys that is built on asymmetric algorithms. Each family operates through distinct methods, which inherently affects performance. Symmetric-key algorithms, for instance, typically run far faster than asymmetric ones because they rely on simple bitwise operations, additions, and table lookups, whereas asymmetric algorithms perform arithmetic on very large integers or elliptic-curve points. Speed is usually evaluated by the time taken to complete a defined task and by the number of processor cycles required, often normalized per byte of input (cycles per byte) so that results remain comparable across machines with different clock rates.
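The cycles-per-byte figure can be derived from a wall-clock measurement when the processor's clock frequency is known. The sketch below assumes a fixed, user-supplied clock rate (the 3 GHz value is purely illustrative); in practice, frequency scaling makes this an approximation, and dedicated benchmarking suites read the CPU's cycle counter directly. The helper names are hypothetical.

```python
import hashlib
import time


def cycles_per_byte(elapsed_s: float, n_bytes: int, clock_hz: float) -> float:
    """Convert a wall-clock duration into cycles per byte at an assumed clock rate."""
    return elapsed_s * clock_hz / n_bytes


def sha256_cycles_per_byte(n_bytes: int = 1 << 20, clock_hz: float = 3.0e9) -> float:
    """Time one SHA-256 pass over n_bytes of zeros and convert to cycles per byte.

    clock_hz is an ASSUMPTION (illustrative 3 GHz); turbo boost and power
    management mean the real frequency may differ during the run.
    """
    data = bytes(n_bytes)
    start = time.perf_counter()
    hashlib.sha256(data).digest()
    elapsed = time.perf_counter() - start
    return cycles_per_byte(elapsed, n_bytes, clock_hz)


if __name__ == "__main__":
    # Worked example: 1,000,000 bytes in 1 ms at 3 GHz is 3,000,000 cycles, i.e. 3 cycles/byte.
    print(cycles_per_byte(0.001, 1_000_000, 3.0e9))
    print(f"SHA-256: ~{sha256_cycles_per_byte():.1f} cycles/byte (assumed 3 GHz clock)")
```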

Benchmarks are foundational to comparing algorithm performance. They are standardized procedures that measure speed under controlled conditions, providing a level playing field for diverse algorithms. A common benchmark in cryptographic studies is the Execution Time metric: how long an algorithm takes to encrypt, decrypt, or hash an input of fixed size. This metric matters most for large datasets, where per-operation costs accumulate; for most symmetric ciphers and hash functions, total time grows roughly linearly with input size, so a small per-byte difference becomes substantial at scale. Experiments should nevertheless use a range of input sizes, because fixed per-call overheads (such as key setup or function-call cost) dominate for small inputs while per-byte cost dominates for large ones, a pattern that a single data point cannot reveal.
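A minimal sketch of the Execution Time metric across several input sizes, using Python's standard `timeit` and `hashlib` modules with SHA-256 as a stand-in for whichever algorithm is under test. Taking the minimum of several repeats, following the advice in the `timeit` documentation, reduces noise from other processes; the function names and the chosen sizes are illustrative assumptions.

```python
import hashlib
import timeit


def sha256(data: bytes) -> bytes:
    """Algorithm under test; swap in any callable that takes bytes."""
    return hashlib.sha256(data).digest()


def execution_time(fn, data: bytes, number: int = 100, repeat: int = 5) -> float:
    """Best-of-`repeat` average seconds per call of fn(data)."""
    timings = timeit.repeat(lambda: fn(data), number=number, repeat=repeat)
    return min(timings) / number


def execution_time_profile(fn, sizes, number: int = 100, repeat: int = 5) -> dict:
    """Map each input size in bytes to its measured seconds per call."""
    return {n: execution_time(fn, bytes(n), number, repeat) for n in sizes}


if __name__ == "__main__":
    profile = execution_time_profile(sha256, [64, 1024, 64 * 1024, 1024 * 1024], number=20)
    for size, secs in profile.items():
        # The per-byte column exposes fixed per-call overhead at small sizes.
        print(f"{size:>8} B  {secs * 1e6:10.2f} us  {secs / size * 1e9:8.3f} ns/B")
```

On most machines one would expect the ns/B column to fall as the input grows, since fixed per-call overhead is amortized over more bytes.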

Another crucial metric is Throughput, the amount of data processed per unit of time, typically reported in megabytes or gigabytes per second. It is particularly salient for applications that encrypt or decrypt high volumes of data, such as virtual private networks (VPNs) and secure file transfers. Throughput reveals the practical limits of an algorithm in real-world use and exposes bottlenecks that arise when handling large data streams.
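Throughput can be computed as bytes processed divided by elapsed time. The sketch below simulates a data stream by feeding SHA-256 fixed-size chunks through Python's incremental `hashlib` interface; the chunk size, the stream length, and the decimal-megabyte convention (1 MB = 10^6 bytes) are assumptions chosen for illustration.

```python
import hashlib
import time


def throughput_mb_s(n_bytes: int, seconds: float) -> float:
    """Throughput in megabytes per second (1 MB = 10**6 bytes)."""
    return n_bytes / seconds / 1e6


def sha256_stream_throughput(total_bytes: int = 256 * 1024 * 1024,
                             chunk_size: int = 64 * 1024) -> float:
    """Feed a simulated stream to SHA-256 in fixed-size chunks and report MB/s."""
    chunk = bytes(chunk_size)
    n_chunks = total_bytes // chunk_size
    hasher = hashlib.sha256()
    start = time.perf_counter()
    for _ in range(n_chunks):
        hasher.update(chunk)  # incremental hashing, as a streaming application would do
    hasher.digest()
    elapsed = time.perf_counter() - start
    return throughput_mb_s(n_chunks * chunk_size, elapsed)


if __name__ == "__main__":
    # Worked example: 10,000,000 bytes in 2 s is 5 MB/s.
    print(throughput_mb_s(10_000_000, 2.0))
    print(f"SHA-256 streaming: {sha256_stream_throughput():.0f} MB/s")
```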

Latency is yet another vital metric. For cryptographic algorithms, it is the time required to complete a single operation, such as encrypting one message or completing one key exchange, measured from request to result. High latency can significantly degrade user experience in time-sensitive applications such as secure online transactions, and can deter users from engaging with secure systems at all. Latency and throughput are distinct: an implementation tuned for bulk data may achieve high throughput yet still show high per-message latency because of fixed setup costs such as key scheduling or, at the protocol level, handshakes. Understanding this distinction is pivotal for developing responsive applications that rely on cryptographic techniques.
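Latency is measured per operation rather than in aggregate. The sketch below times individual HMAC-SHA256 computations over a 64-byte message (an illustrative choice, not a standard workload) and reports the median and 99th-percentile values, since occasional slow operations are what users actually perceive. Each call includes HMAC's key setup, one example of the fixed cost that separates latency from bulk throughput. Function names are hypothetical.

```python
import hashlib
import hmac
import os
import statistics
import time


def latency_samples_ns(op, n: int = 1000) -> list:
    """Time n independent calls of op() individually, in nanoseconds."""
    samples = []
    for _ in range(n):
        start = time.perf_counter_ns()
        op()
        samples.append(time.perf_counter_ns() - start)
    return samples


def summarize(samples: list) -> dict:
    """Median and 99th-percentile latency; the tail is what users notice."""
    return {
        "median_ns": statistics.median(samples),
        "p99_ns": statistics.quantiles(samples, n=100)[98],
    }


KEY = os.urandom(32)
MESSAGE = b"x" * 64  # small message, typical of request/response traffic


def hmac_one_message() -> bytes:
    """One complete HMAC-SHA256 operation, including per-call key setup."""
    return hmac.new(KEY, MESSAGE, hashlib.sha256).digest()


if __name__ == "__main__":
    print(summarize(latency_samples_ns(hmac_one_message)))
```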

Beyond these conventional metrics, Parallelism offers another lens on algorithmic speed, one especially pertinent in an era of multicore processors and cloud computing. Some cryptographic constructions allow operations to run concurrently: in counter (CTR) mode, for example, each keystream block depends only on the key, the nonce, and the block index, so blocks can be processed independently, whereas CBC-mode encryption chains each block to the previous ciphertext block and must proceed sequentially. When implemented appropriately, such parallelism can raise throughput considerably. An algorithm's scalability across cores or distributed systems is therefore an additional property of merit, as it may enable higher throughput than sequential execution.
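The independence that makes counter mode parallelizable can be shown with a toy construction. The sketch below derives each keystream block as SHA-256(key || nonce || counter), a hash-based stand-in chosen only because Python's standard library has no block cipher; it is NOT a vetted cipher and must not be used to protect data. Because no block depends on another, a thread pool can compute them in any order and still produce the same keystream as a sequential loop. Whether threads yield a real speedup depends on the runtime: in CPython the global interpreter lock limits gains for such small hashes, so native code or separate processes are the usual route to true parallelism.

```python
import hashlib
import os
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 32  # bytes per keystream block (SHA-256 digest size)


def keystream_block(key: bytes, nonce: bytes, counter: int) -> bytes:
    """TOY PRF: block i depends only on (key, nonce, i), never on block i-1."""
    return hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()


def keystream_sequential(key: bytes, nonce: bytes, n_blocks: int) -> bytes:
    return b"".join(keystream_block(key, nonce, i) for i in range(n_blocks))


def keystream_parallel(key: bytes, nonce: bytes, n_blocks: int, workers: int = 4) -> bytes:
    # pool.map yields results in input order, so blocks are reassembled correctly
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(lambda i: keystream_block(key, nonce, i), range(n_blocks)))


def ctr_xor(data: bytes, key: bytes, nonce: bytes) -> bytes:
    """XOR data with the keystream; the same call encrypts and decrypts."""
    n_blocks = -(-len(data) // BLOCK_SIZE)  # ceiling division
    stream = keystream_parallel(key, nonce, n_blocks)
    return bytes(d ^ s for d, s in zip(data, stream))


if __name__ == "__main__":
    key, nonce = os.urandom(32), os.urandom(16)
    assert keystream_parallel(key, nonce, 1000) == keystream_sequential(key, nonce, 1000)
    plaintext = b"independent blocks can be processed concurrently"
    ciphertext = ctr_xor(plaintext, key, nonce)
    assert ctr_xor(ciphertext, key, nonce) == plaintext
    print("parallel and sequential keystreams match")
```

A CBC-style chain, by contrast, could not be split this way during encryption, because computing block i would first require the output of block i-1.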

However, the analysis of cryptographic speed calls for a critical lens. Speed is only one dimension of a multifaceted evaluation framework. Security is paramount: a fast algorithm that is vulnerable to attack compromises the very data it was meant to protect, defeating its purpose. Simplicity in an algorithm's design can enable faster execution but may inadvertently introduce weaknesses, and implementation shortcuts carry similar risks: table-based software implementations of AES, for example, are fast but can leak key information through cache-timing side channels. Balancing performance and security is therefore essential and requires rigorous scrutiny by cryptographers and practitioners alike.
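A small, concrete instance of the speed-versus-security tension arises when verifying a message authentication tag. An ordinary equality check may return as soon as it finds a differing byte, which is fast but can reveal, through timing, how much of a forged tag was correct. Python's standard `hmac.compare_digest` is documented as avoiding this content-based short-circuiting. The helper names below are illustrative.

```python
import hashlib
import hmac
import os


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag for message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check a received tag without an early-exit comparison.

    `expected == tag` may stop at the first differing byte; compare_digest is
    designed to avoid that content-dependent shortcut, trading a little speed
    for resistance to timing analysis.
    """
    expected = make_tag(key, message)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = os.urandom(32)
    msg = b"transfer 100 to account 42"
    tag = make_tag(key, msg)
    print(verify_tag(key, msg, tag))                            # True
    print(verify_tag(key, msg, bytes([tag[0] ^ 1]) + tag[1:]))  # False
```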

The cryptographic community also continues to advance, with new algorithms emerging to address present deficiencies. Post-quantum cryptography is one such field: it develops algorithms intended to resist attacks by quantum computers. Some post-quantum schemes are slower than their classical counterparts, and many use considerably larger keys, ciphertexts, or signatures, which also affects performance in practice. Their long-term security benefit nonetheless warrants further exploration and continued investment in optimization.

Moreover, speed comparisons lose their usefulness without the context in which an algorithm operates. A lightweight algorithm may excel in resource-constrained environments such as IoT devices, whereas heavier algorithms with larger security margins may be preferable in enterprise-level applications. Hardware support matters as well: on processors with dedicated AES instructions, AES is typically very fast, while on devices without them a software-friendly cipher such as ChaCha20 often performs better. Context therefore plays a major role in choosing the most appropriate algorithm and further complicates simplistic comparisons.
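Because results depend so heavily on context, a benchmark figure is only comparable when the environment that produced it is recorded alongside it. The sketch below attaches basic platform details from Python's standard `platform`, `os`, and `ssl` modules to a single measurement; the record layout is a hypothetical choice, and the OpenSSL version is included because CPython's `hashlib` is usually backed by OpenSSL. It does not detect hardware features such as AES instructions, which would need platform-specific tooling.

```python
import hashlib
import os
import platform
import ssl
import timeit


def environment_info() -> dict:
    """Collect details that strongly influence cryptographic benchmark results."""
    return {
        "os": platform.platform(),
        "machine": platform.machine(),      # CPU architecture, e.g. x86_64 or arm64
        "processor": platform.processor(),  # may be an empty string on some systems
        "cpu_count": os.cpu_count(),
        "python": platform.python_version(),
        "openssl": ssl.OPENSSL_VERSION,
    }


def benchmark_record(algorithm: str, n_bytes: int, number: int = 50) -> dict:
    """Time one hashlib algorithm on n_bytes and bundle the result with its context."""
    data = bytes(n_bytes)
    best = min(timeit.repeat(lambda: hashlib.new(algorithm, data).digest(),
                             number=number, repeat=5))
    return {
        "algorithm": algorithm,
        "input_bytes": n_bytes,
        "seconds_per_call": best / number,
        "environment": environment_info(),
    }


if __name__ == "__main__":
    for algo in ("sha256", "sha512", "blake2b"):
        print(benchmark_record(algo, 1 << 20))
```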

In summation, while there exists a plethora of metrics for comparing the speed of cryptographic algorithms—such as Execution Time, Throughput, Latency, and considerations of Parallelism—the inherent complexity of securing data necessitates a nuanced approach. The interplay of speed, security, and contextual application must be diligently examined to arrive at informed decisions. Ultimately, recognizing that no single metric offers a complete picture allows stakeholders in the cryptographic domain to pursue technologies that are not merely fast, but also resilient, adaptable, and secure.
