In the realm of cryptography, hash functions serve as the custodians of data integrity, akin to how sentinels guard the fortresses of information. Embedded within the fabric of digital communications, these functions epitomize a blend of simplicity and complexity. Their deterministic nature, or the lack thereof, warrants a meticulous examination. The query, “Is a cryptographic hash always deterministic?” beckons exploration into the foundational principles governing such constructs.

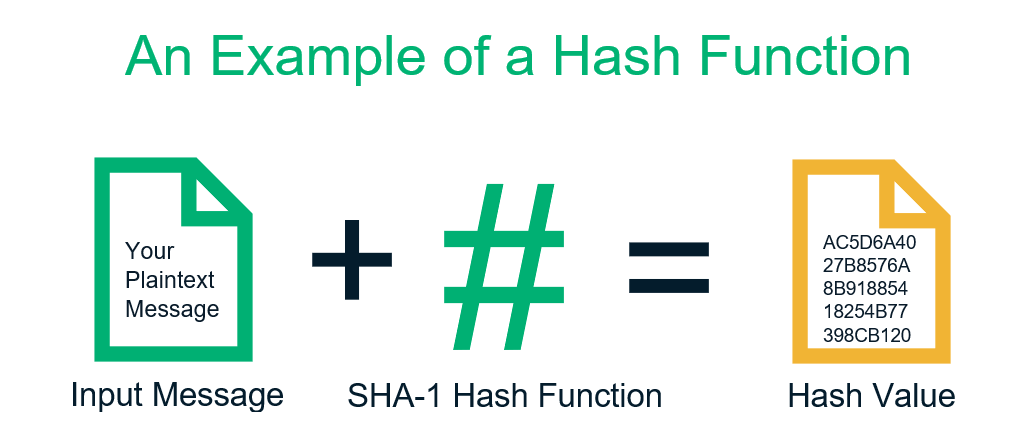

To comprehend the nuances of hash functions, one must first grasp the essence of determinism. A deterministic process yields the same output from the same input consistently, creating predictability in an otherwise volatile digital landscape. In the context of hash functions, this means that when the same set of data is subjected to a hashing algorithm, it should invariably produce an identical hash value. This property is critical for various applications, such as data integrity checks, digital signatures, and password storage.

Consider a master key in a medieval fortress, meticulously crafted to unlock a single door. Each time the key is used, it produces the same outcome—access to the coveted treasure within. Similarly, a deterministic hash function guarantees that the digital key to data—its hash—remains unchanged, fostering trust in the system’s integrity. However, the landscape of hashing is riddled with intricacies.

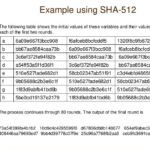

When exploring hash functions, one encounters different classes, including cryptographic hash functions like SHA-256 and non-cryptographic ones such as hash tables in computer science. The focus here remains on cryptographic variants, as they embed properties that elicit added assurance. Beyond determinism, a robust cryptographic hash function should exhibit uniqueness, collision resistance, and pre-image resistance. Irrespective of the nuances, the critical determinant remains: given identical inputs, such a hash function dutifully delivers congruent output.

Despite this underpinning of determinism, the term can lead to misunderstandings in the broader context of hashing algorithms. Analyzing a hash function reveals that while the output remains constant for a particular input, numerous inputs can yield identical hashes—a phenomenon known as a hash collision. An exemplary metaphor for this is a crowded marketplace where multiple vendors sell the same product under different brand names, permitting several paths to the same outcome.

Collisions hint at the collision resistance required in cryptographic contexts. A quality hash function minimizes the likelihood of two different inputs generating the same output, safeguarding data integrity. For instance, while SHA-256 operates deterministically, it’s also designed to avoid collisions, ensuring that even the smallest alteration in the input—a mere whitespace change—yields a vastly different hash. This relationship resembles a delicate ecosystem where each organism has its unique role, maintaining the harmony of the environment.

Moving forward, it’s essential to address the phenomena of data manipulation and the implications for determinism in hash functions. When malicious entities attempt to alter data—be it through substitution or more sophisticated means—deterministic hash functions prevent ambiguity. By generating an immutable fingerprint of original data, any subsequent transformations can be easily detected. The unwavering nature of these hashes acts as digital guardians, raising the alarm when disparate values emerge as the result of tampering, thus reestablishing the significance of a well-behaved hash function to its users.

However, the human element introduces a layer of complexity. As programmers design hash algorithms, occasional oversights may occur, affecting outputs under specific conditions. When insufficient attention is paid to the design parameters—or when a hash function is inadequately implemented—the deterministic property can be compromised. This imperfection cues us to an essential insight: while hash functions intrinsically serve as deterministic constructs, human intervention may inadvertently introduce variability into an otherwise unyielding framework.

Furthermore, the transition to quantum computing foreshadows unforeseen shifts in the cryptographic landscape, challenging the elemental properties of traditional hash functions. While deterministic characteristics remain a cornerstone for current algorithms, the advent of quantum capabilities may render even the strongest cryptographic functions susceptible to vulnerabilities. The landscape continues evolving, prompting rigorous inquiry into future-proofing hash technologies against emerging threats. Echoing the philosophical query of whether change is an intrinsic aspect of existence, one must wonder if the deterministic nature of hash functions holds steadfast in the face of revolutionary technological advancements.

In conclusion, the discourse surrounding the determinism of cryptographic hash functions, while rooted in foundational principles, merits continuous evaluation. These constructs are designed to be deterministic, ensuring that the same input consistently corresponds to the same output. Nevertheless, the intricacies of collision resistance, data manipulation, and external influences remind us that while the machinery behind hashing operates with diligence, the broader ecosystem is influenced by variables that could potentially alter outcomes. The interplay between determinism and the complexities of the digital domain underscores the necessity for vigilance and innovation in an ever-evolving technological landscape. As guardians of digital integrity, hash functions exemplify both the robustness of cryptographic security and the challenges posed by our dynamic world, forever prompting inquiry and exploration.

Leave a Comment