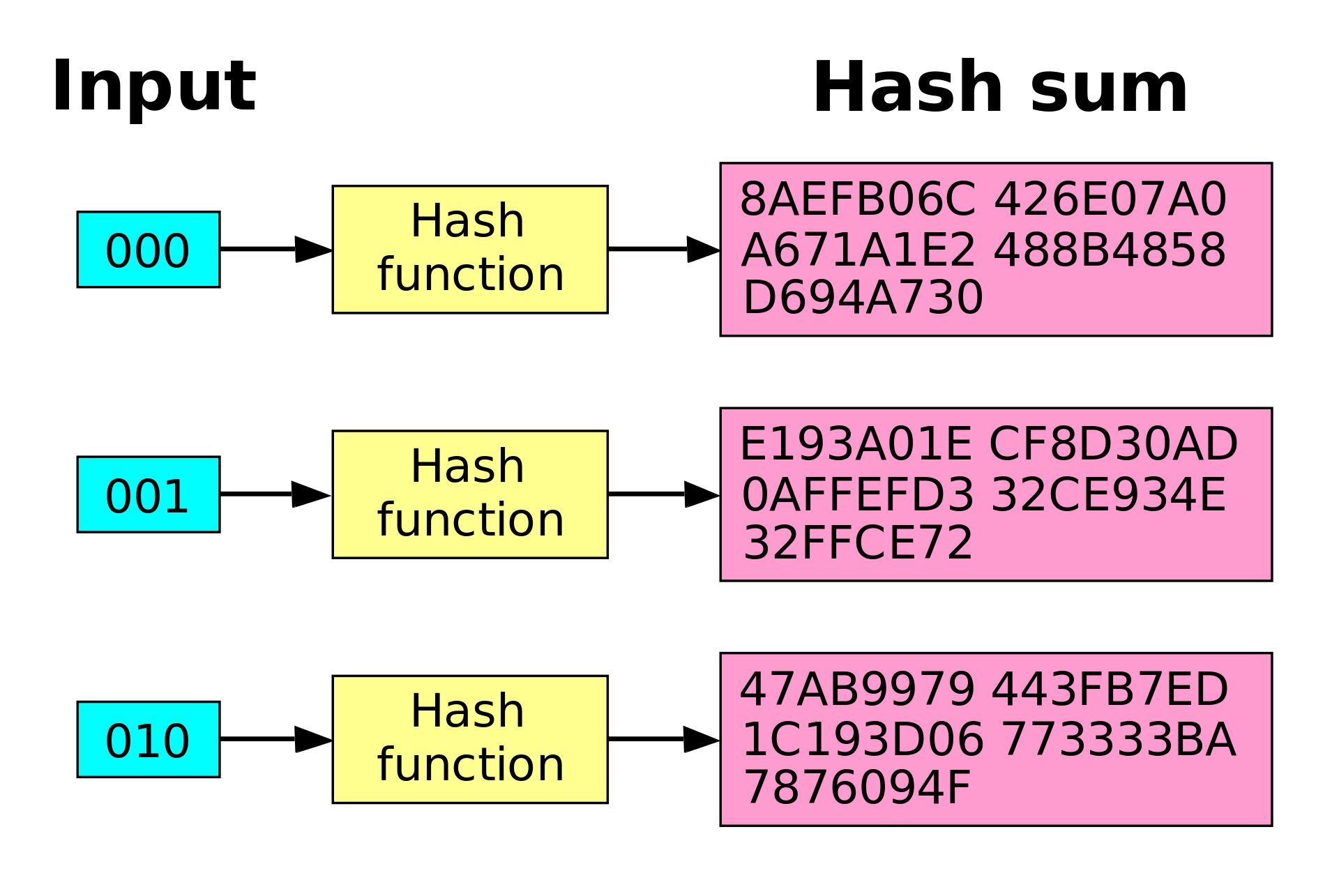

In digital security, hashing functions carry a lot of weight. These cryptographic tools serve multiple purposes, from data integrity verification to password storage. But how can one be certain that a hashing function is secure? There are six golden rules that, if adhered to, help ensure the integrity and safety of any hashing application. What happens if a hashing function fails to meet these criteria? The repercussions can be severe: compromised data, breaches, and loss of trust. Let’s delve into the rules that safeguard against these threats.

1. Irreversibility

The primary tenet of a secure hashing function is irreversibility, also called preimage resistance. Once data is transformed into a hash, it should not be computationally feasible to recover the original input from the hash alone. Think of a lock that anyone can snap shut but that has no key to open it again. An attacker who obtains the hash should be unable to work backwards to the original information. To test this rule, consider what would happen if a clever adversary found a way to reverse-engineer a hash: every stored hash would expose the sensitive data behind it, making your hashing efforts futile.
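
As a minimal sketch of what irreversibility means in practice, the Python snippet below uses SHA-256 from the standard `hashlib` module. The helper names `sha256_hex` and `guess_preimage` and the sample inputs are illustrative assumptions, not part of any library. There is no inverse function to call; the only way from a digest back to an input is to guess candidates and hash each one.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def guess_preimage(target_hex, candidates):
    """Hypothetical helper: try each candidate and return the one that matches.

    This is guessing, not reversing. It only succeeds when the original
    input happens to be in the candidate list.
    """
    for candidate in candidates:
        if sha256_hex(candidate) == target_hex:
            return candidate
    return None


digest = sha256_hex(b"hunter2")

# The digest has a fixed length no matter how large the input is.
print(len(digest), len(sha256_hex(b"x" * 1_000_000)))

# Guessing works only if the input is among the guesses.
print(guess_preimage(digest, [b"password", b"letmein", b"hunter2"]))
print(guess_preimage(digest, [b"password", b"letmein"]))
```

This is also why irreversibility alone does not protect weak inputs: if the original value is easy to guess, its hash can still be matched.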

2. Collision Resistance

Next, consider collision resistance. It should be computationally infeasible to find two distinct inputs that yield the same hash output. Because a hash has a fixed length, collisions must exist; the requirement is that nobody can find one in practice. This is crucial because if two different datasets produce the same hash, an attacker can substitute one for the other without detection. It’s akin to two separate keys unlocking the same door. Adhering to this rule preserves the assumption that a given hash identifies exactly one piece of data. What if your data integrity relies on hashes that are susceptible to collision? That’s a recipe for disaster.
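
To make the idea concrete, here is a small Python sketch that deliberately weakens SHA-256 by keeping only the first few bytes of each digest. The functions `truncated_hash` and `find_collision` are hypothetical names chosen for this illustration. With so few output bits, two different inputs that share a hash turn up almost immediately.

```python
import hashlib
from itertools import count


def truncated_hash(data: bytes, n_bytes: int) -> bytes:
    """Keep only the first n_bytes of SHA-256 (an intentionally weak hash)."""
    return hashlib.sha256(data).digest()[:n_bytes]


def find_collision(n_bytes: int):
    """Hash the messages b"0", b"1", b"2", ... until two share a truncated hash."""
    seen = {}  # truncated hash -> first message that produced it
    for i in count():
        message = str(i).encode()
        h = truncated_hash(message, n_bytes)
        if h in seen:
            return seen[h], message
        seen[h] = message


first, second = find_collision(3)  # 3 bytes = 24 output bits
print(first, second, truncated_hash(first, 3).hex())
```

For an output of n bits, a search like this is expected to succeed after roughly 2^(n/2) attempts, which is why a full 256-bit digest puts it far out of reach while a 24-bit one does not.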

3. Uniform Distribution

A third rule of paramount significance is uniform distribution. A reliable hashing function should spread its outputs evenly across the output space, so that no hash value is noticeably more likely than another. A closely related property is the ‘avalanche effect’: even a minute change in the input should produce a dramatically different hash, with roughly half of the output bits flipping. Should the hash function produce similar hashes for similar inputs, an attacker could use the resemblance to predict hashes or narrow down the input. A hashing function that lacks these properties is an unwanted giveaway to potential intruders, making it easier for them to sift through candidate passwords or sensitive data.
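
The avalanche effect can be observed directly. The sketch below, which assumes SHA-256 from Python's standard `hashlib` and uses a hypothetical helper named `bit_difference`, counts how many of the 256 output bits differ between the digests of two inputs.

```python
import hashlib


def bit_difference(a: bytes, b: bytes) -> int:
    """Count the bits that differ between the SHA-256 digests of a and b."""
    digest_a = int.from_bytes(hashlib.sha256(a).digest(), "big")
    digest_b = int.from_bytes(hashlib.sha256(b).digest(), "big")
    return bin(digest_a ^ digest_b).count("1")


# "d" (0x64) and "e" (0x65) differ in a single bit, so these two inputs
# are as close to each other as two distinct inputs can be.
changed = bit_difference(b"hello world", b"hello worle")
print(f"{changed} of 256 output bits changed")
```

For a well-behaved hash the count should land near 128, that is, about half of the output bits.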

4. Fast Computation

While security is the cornerstone of a good hashing function, efficiency should not be sidelined. For general-purpose uses such as integrity checks and digital signatures, a hashing function must be fast to compute, because delays hamper user experience and functionality in systems that hash large volumes of data. Password storage is the important exception: there, a fast hash lets an attacker test enormous numbers of guesses cheaply, so deliberately slow, tunable algorithms such as bcrypt, scrypt, Argon2, or PBKDF2 are used instead. The pursuit of speed must not overshadow security; finding the right balance for each use case is essential. Imagine you deployed an exceedingly fast hashing function that forsook security. What advantage does such a function hold when it makes your system vulnerable?
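
The trade-off between speed and security can be measured. The following sketch compares one plain SHA-256 call with PBKDF2, a key-derivation function available in Python's `hashlib` that repeats an underlying hash many times. The helper `best_time`, the iteration count, and the fixed salt are assumptions made for this demo only; absolute timings depend on the machine.

```python
import hashlib
import time


def best_time(fn, repeats=3):
    """Return the fastest of several timed runs of fn, in seconds."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best


data = b"correct horse battery staple"
salt = b"demo-salt-16byte"  # fixed only so the demo is repeatable
iterations = 100_000        # illustrative value, not a recommendation

fast = best_time(lambda: hashlib.sha256(data).digest())
slow = best_time(lambda: hashlib.pbkdf2_hmac("sha256", data, salt, iterations))

print(f"single SHA-256:  {fast:.6f} s")
print(f"PBKDF2 x{iterations}: {slow:.6f} s")
```

A fast hash suits checksums over large files, while the slower, tunable construction makes each password guess more expensive for an attacker.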

5. Salting

Another crucial rule is salting, a method where random data is combined with an input before hashing and stored alongside the resulting hash. A unique salt per password changes the final output, preventing attackers from using precomputed tables, or ‘rainbow tables,’ to crack passwords. Without a salt, identical inputs result in identical hashes, so a single precomputed table works against the whole database and one cracked hash exposes every account that shares the password. Envision a scenario where developers neglect salting; are they not essentially offering a buffet of passwords for attackers? With unique salts, identical passwords produce different hashes and each hash has to be attacked separately. A salt does not slow down guessing against a single hash, so it complements a slow password-hashing algorithm rather than replacing it.
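
A minimal sketch of salted password hashing with Python's standard library might look like the following. The function names `hash_password` and `verify_password`, the 16-byte salt length, and the iteration count are assumptions for illustration; a production system would usually rely on a maintained password-hashing library.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative value, not a recommendation


def hash_password(password, salt=None):
    """Return (salt, digest); a fresh random salt is generated when none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)


salt_a, digest_a = hash_password("same password")
salt_b, digest_b = hash_password("same password")

print(digest_a != digest_b)                                # different salts, different hashes
print(verify_password("same password", salt_a, digest_a))  # correct password
print(verify_password("wrong guess", salt_a, digest_a))    # incorrect password
```

The salt is not a secret. It is stored next to the digest so that verification can repeat the same computation.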

6. Regular Reassessment

Lastly, a secure hashing function cannot merely be set and forgotten. Continuous evolution in cryptographic attacks necessitates regular reassessment of hashing algorithms and techniques. Advances in computational power and cryptanalysis can render previously robust hashing functions obsolete, as happened with MD5 and SHA-1, for both of which practical collisions have been demonstrated. A proactive approach, one that entails conscientious evaluation of the adequacy and security of the hashing function in use, is therefore indispensable. Contemplate the consequences of neglecting this fundamental rule. Are systems not inviting potential breaches through complacency?
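
One way to make reassessment practical is to store the algorithm name and its parameters next to each hash, so that outdated entries can be detected and upgraded later. The sketch below assumes a made-up `$`-separated record format and hypothetical helpers `make_record` and `needs_rehash`; the policy values are placeholders, not recommendations.

```python
import hashlib
import os

# Hypothetical current policy; revisit these values as guidance changes.
CURRENT_ALGORITHM = "pbkdf2_sha256"
CURRENT_ITERATIONS = 200_000


def make_record(password, iterations=CURRENT_ITERATIONS):
    """Build a self-describing record: algorithm$iterations$salt_hex$digest_hex."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return f"{CURRENT_ALGORITHM}${iterations}${salt.hex()}${digest.hex()}"


def needs_rehash(record):
    """Report whether a stored record falls short of the current policy."""
    algorithm, iterations, _salt_hex, _digest_hex = record.split("$")
    return algorithm != CURRENT_ALGORITHM or int(iterations) < CURRENT_ITERATIONS


fresh = make_record("example password")
stale = make_record("example password", iterations=1_000)

print(needs_rehash(fresh))  # created under the current policy
print(needs_rehash(stale))  # created with a weaker iteration count
```

A typical use is to call a check like `needs_rehash` after a successful login, when the plaintext password is briefly available, and to store a freshly computed record if the old one is out of date.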

In conclusion, the six golden rules serve as critical guardrails for the development and implementation of secure hashing functions. Each rule plays its part in fortifying data against unauthorized access and ensuring the integrity of digital information. By adhering to the principles of irreversibility, collision resistance, uniform distribution, fast computation, salting, and regular reassessment, one can build resilient cryptographic systems that stand the test of time. Ultimately, the importance of sound hashing cannot be overstated, as vigilance and diligence play pivotal roles in cybersecurity. So, it raises the question: is your current hashing function truly up to the task?
