Imagine you’ve just downloaded a crucial file, one that contains sensitive information or valuable data you cannot afford to lose. How can you be certain that the file you received is identical to the one that was sent, and that it wasn’t tampered with or corrupted on its journey through vast and unpredictable networks? This is where checksums come in: they play a vital role in assuring data integrity.

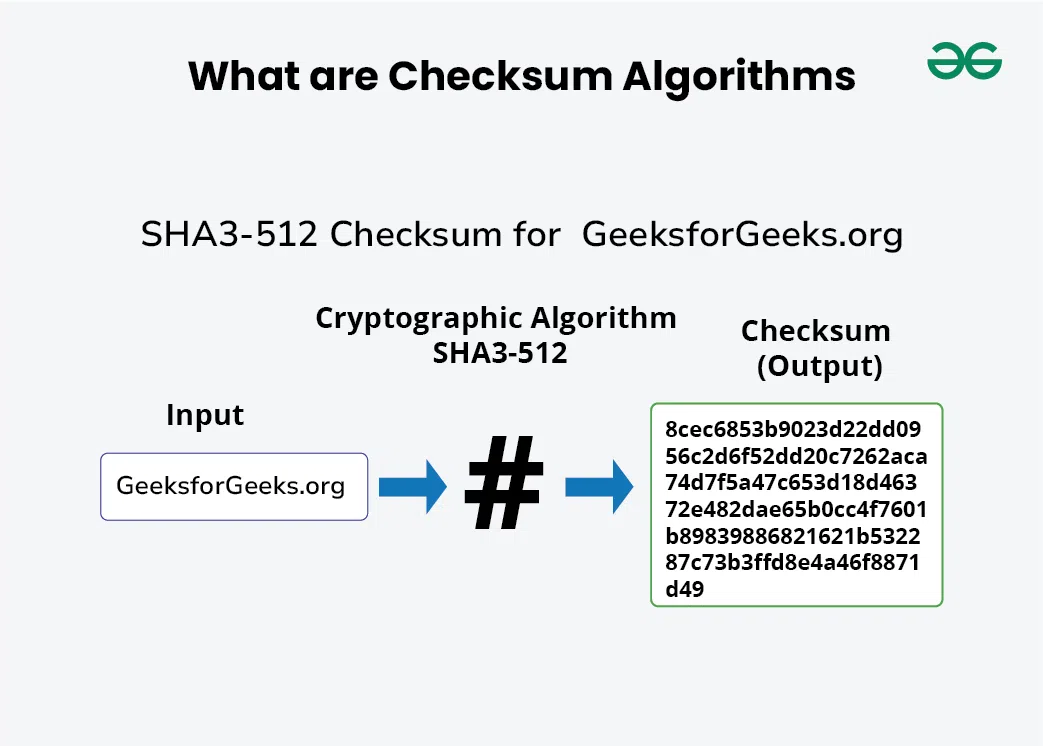

At its core, a checksum is a small piece of data derived from a larger set of data. Think of it as a digital fingerprint of that data. It’s computed by an algorithm that converts the original data, whatever its size, into a short, fixed-length string of characters or numbers. If the original data is modified in any way, intentionally or accidentally, the checksum will almost certainly change as well, which is what makes it an indicator of corruption.
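To see both properties in action, here is a minimal Python sketch using the standard library’s `hashlib` module with SHA-256 (an assumed choice; other hash functions behave the same way here). The `sha256_hex` helper and the sample data are hypothetical. The sketch shows that the digest length stays fixed regardless of input size, and that flipping a single bit produces a completely different digest.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()

original = b"Quarterly report: revenue up 4%"
# Flip the lowest bit of the first byte to simulate a single-bit corruption.
corrupted = bytes([original[0] ^ 0x01]) + original[1:]

# A 1-byte input and a 1,000,000-byte input yield digests of the same length.
print(len(sha256_hex(b"a")), len(sha256_hex(b"a" * 1_000_000)))  # 64 64

print(sha256_hex(original))
print(sha256_hex(corrupted))  # bears no resemblance to the line above
print(sha256_hex(original) == sha256_hex(corrupted))  # False
```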

The purpose of checksums becomes clear when you look at how they detect data corruption. Checksums are commonly calculated with algorithms such as MD5, SHA-1, or SHA-256. These are cryptographic hash functions, designed so that two different inputs are extremely unlikely to produce the same value (although, as discussed below, this guarantee has been broken for MD5). For example, when you download a file, the publisher often provides the corresponding checksum. After the download, you compute the checksum of the received file and compare it to the published one. If the two match, the data’s integrity is verified; if not, the file you received differs from the original.
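The download check described above can be sketched in Python with the standard `hashlib` module. This assumes the publisher provides a SHA-256 checksum as a hex string; the function names `file_sha256` and `verify_download` are illustrative, not part of any library.

```python
import hashlib

def file_sha256(path: str, chunk_size: int = 65536) -> str:
    """Compute a file's SHA-256 hex digest, reading it in chunks to bound memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() keeps calling f.read() until it returns b"" at end of file.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_download(path: str, expected_hex: str) -> bool:
    """Return True if the file's digest matches the checksum published by the sender."""
    # Published checksums are sometimes upper-case or carry trailing whitespace.
    return file_sha256(path) == expected_hex.strip().lower()
```

For example, `verify_download("installer.iso", published_hex)` (a hypothetical file name) returns `True` only if every byte of the download matches what the publisher hashed. On Python 3.11 and later, `hashlib.file_digest(f, "sha256")` performs the same chunked reading for you.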

So what exactly can go wrong during transmission or storage that makes checksum verification necessary? Data can be corrupted by hardware malfunctions, software bugs, or external interference during transmission. Picture a file damaged by packet loss or a flipped bit in transit, or by a failing hard disk. Either way, the result can be subtle modifications or outright data loss, with potentially catastrophic consequences if the data is sensitive or critical.

Herein lies the utility of checksums: they shine a light on problems that would otherwise go unnoticed during data transfer. Their role also extends beyond error checking into cybersecurity, where they help detect malicious activity such as unauthorized modifications or injected content. If a malicious actor alters the data, recomputing the checksum and comparing it with a trusted reference value will reveal the tampering, so it can be flagged before further damage occurs.

However, does this mean checksums are flawless? No system is infallible. An attacker who can alter both the data and its published checksum, for example by compromising the server that hosts both, can bypass standard verification entirely. For this reason, data integrity assurance calls for a layered approach that combines checksums with other techniques, such as digital signatures and encryption, to provide multiple layers of protection.
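The following Python sketch illustrates the weakness: if an attacker replaces both the file and its published SHA-256 checksum, a plain comparison still passes. As a contrast it uses HMAC, a keyed hash from the standard library’s `hmac` module. HMAC is a stand-in chosen for brevity, not a digital signature, and it assumes sender and receiver already share a secret key; the key and data values are hypothetical. Digital signatures achieve a similar effect with public-key cryptography, so no shared secret is needed.

```python
import hashlib
import hmac

original = b"installer v1.0"
tampered = b"installer v1.0 + malware"

# The attacker swaps the file and publishes a checksum recomputed over it.
published_checksum = hashlib.sha256(tampered).hexdigest()

# The receiver's plain checksum check passes: it cannot tell the file was swapped.
plain_check_passes = hashlib.sha256(tampered).hexdigest() == published_checksum

# Hypothetical shared secret; in practice it must be distributed securely.
SECRET_KEY = b"example-key-known-only-to-sender-and-receiver"

def make_tag(message: bytes) -> str:
    """Compute an HMAC-SHA256 tag; producing a valid tag requires SECRET_KEY."""
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()

genuine_tag = make_tag(original)  # what the real sender would publish

# Without the key, the attacker can only republish the old tag or a plain hash.
for forged_tag in (genuine_tag, hashlib.sha256(tampered).hexdigest()):
    print(hmac.compare_digest(make_tag(tampered), forged_tag))  # False both times

print(plain_check_passes)  # True
```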

Moreover, the choice of checksum algorithm is paramount. Some algorithms have weaknesses that can be exploited, creating a false sense of security. For instance, MD5, once widely used, has been shown to be vulnerable to collision attacks, in which two different inputs produce the same checksum, and practical collisions have also been demonstrated for SHA-1. Consequently, many professionals now recommend algorithms such as SHA-256, for which no practical collision attacks are known.
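One way to act on this advice in code is to refuse weak algorithms outright. Below is a hypothetical policy helper built on `hashlib.new`; the name `secure_digest` and the exact deny list are assumptions for illustration, not a standard.

```python
import hashlib

# Hypothetical policy: algorithms with demonstrated practical collisions are rejected.
WEAK_ALGORITHMS = {"md5", "sha1"}

def secure_digest(data: bytes, algorithm: str = "sha256") -> str:
    """Hash `data` with `algorithm`, refusing algorithms on the deny list."""
    name = algorithm.lower()
    if name in WEAK_ALGORITHMS:
        raise ValueError(f"{algorithm} is vulnerable to collision attacks; use sha256 or stronger")
    # hashlib.new accepts names such as "sha256", "sha512", "sha3_256", or "blake2b".
    return hashlib.new(name, data).hexdigest()
```

Calling `secure_digest(b"data")` returns a SHA-256 hex digest, while `secure_digest(b"data", "md5")` raises `ValueError`. A deny list like this matters mainly where an attacker could craft colliding inputs; for catching accidental corruption, collision resistance is less critical.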

Implementing a checksum strategy follows a simple protocol. First, a checksum is computed when the data is created; this is known as the initial, or pre-transmission, checksum. Then, as the data is transmitted or stored, the checksum is recomputed at various checkpoints and compared with the initial value, verifying the data’s integrity at each stage. This way, even if part of the data becomes corrupted after multiple transfers, the source checksum always serves as the benchmark that reveals the damage.
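The checkpoint scheme can be sketched in Python, assuming SHA-256 as the checksum and modeling each transfer or storage stage as a function that takes and returns bytes. The `transfer` helper and the `copy_hop` and `flip_bit_hop` stages are hypothetical stand-ins for real network or storage steps.

```python
import hashlib
from typing import Callable, Sequence

Hop = Callable[[bytes], bytes]  # one transfer or storage stage

def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def transfer(data: bytes, hops: Sequence[Hop], reference: str) -> bytes:
    """Run data through each hop, comparing it with the source checksum after every hop."""
    for i, hop in enumerate(hops, start=1):
        data = hop(data)
        if checksum(data) != reference:
            raise ValueError(f"integrity check failed after hop {i}")
    return data

def copy_hop(data: bytes) -> bytes:
    # A faithful stage: the bytes arrive unchanged.
    return bytes(data)

def flip_bit_hop(data: bytes) -> bytes:
    # A faulty stage: the lowest bit of the first byte is flipped.
    return bytes([data[0] ^ 0x01]) + data[1:]

payload = b"customer_id,balance\n42,100.00\n"
reference = checksum(payload)  # the initial, pre-transmission checksum

transfer(payload, [copy_hop, copy_hop], reference)  # passes silently
try:
    transfer(payload, [copy_hop, flip_bit_hop, copy_hop], reference)
except ValueError as err:
    print(err)  # integrity check failed after hop 2
```

Checking after every hop, rather than only at the destination, pinpoints which stage introduced the corruption.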

In real-world applications, checksums are omnipresent. From file downloads across the internet to integrity checks in databases, they are an indispensable component of modern digital communications. Software developers build checksum verification into applications to confirm that files are intact, while systems engineers use checksums in backups to ensure data can be restored reliably. Every time you browse an online store, upload documents to a cloud service, or send an important email, algorithms may be working in the background, computing checksums to detect data corruption.

In conclusion, checksums stand as sentinels in the realm of data integrity. They are pivotal in detecting erroneous changes that could distort the information stored in digital files. However, while checksums are a formidable tool for identifying corruption, you must remain aware of their limitations. Combining checksums with more robust security measures, such as digital signatures, creates a multilayered defense against both data corruption and malicious attacks. The digital landscape is rife with challenges, and as people grow ever more reliant on technology, understanding the mechanisms behind data integrity will enable more secure interactions. So the next time you press “download,” consider the journey your data is about to take and the invisible safeguards that accompany it, including the checksums that stand guard against the sneaky specters of data corruption.
